WAI-Tools Documentation of Pilot Monitoring

As a part of the WAI-Tools project, the Norwegian Digitalisation Agency has performed a pilot monitoring on a sample of four public sector bodies and their websites based on the requirements for monitoring as referred to in the Directive (EU) 2016/2102.

1. Introduction

Directive (EU) 2016/2102 aims to ensure that the websites and mobile applications of public sector bodies are made more accessible on the basis of common accessibility requirements.

The Member States shall ensure that public sector bodies take the necessary measures to make their websites and mobile applications more accessible by making them perceivable, operable, understandable, and robust.

Content of websites and mobile applications that fulfils the relevant requirements of European standard EN 301 549 V2.1.2 or parts thereof, shall be presumed to be in conformity with the accessibility requirements. According to EN 301 549, conformance with the web requirements is equivalent to conforming with the Web Content Accessibility Guidelines (WCAG) 2.1 Level AA. An accessibility statement should be provided by public sector bodies on the compliance of their websites and mobile applications with the accessibility requirements laid down by the Directive.

Conformity with the accessibility requirements set out in the Directive should be periodically monitored. The Member States shall apply

- an in-depth monitoring method that thoroughly verifies whether a website or mobile application satisfies all the requirements identified in the standards and technical specifications

- a simplified monitoring method to websites that detects instances of non-compliance with a sub-set of the requirements in the standards and technical specifications

referred to in Article 6 of Directive (EU) 2016/2102.

WAI-Tools, Advanced Decision Support Tools for Scalable Web Accessibility Assessments, is an Innovation Action project, co-funded by the European Commission (EC) under the Horizon 2020 program (Grant Agreement 780057). The project started on 1 November 2017 for a duration of three years.

The project is closely linked to European and international efforts on web accessibility standardisation, including the Web Content Accessibility Guidelines (WCAG) 2.1. It is also highly relevant considering the monitoring methodology referred to in the Directive.

The project partners are:

- European Research Consortium for Informatics and Mathematics (ERCIM), European host for World Wide Web Consortium (W3C)

- Siteimprove, Denmark

- Accessibility Foundation, Netherlands

- Administrative Modernization Agency, Portugal

- University of Lisbon, Portugal

- Deque Research, Netherlands

- The Norwegian Digitalisation Agency, Norway

Some of the objectives of the project are to:

- build a common set of Web Accessibility Conformance Test (ACT) Rules from W3C, to provide an interpretation for evaluation tools and methodologies based on the W3C Web Content Accessibility Guidelines (WCAG).

- help increase the level of automation in web accessibility evaluation through defining test rules and bleeding-edge technologies which are increasingly available today.

The ACT Rules formally published by W3C provide authoritative checks for the WCAG 2.1 Success Criteria. Through a community of contributors, the aims are to reduce differing interpretations of WCAG, make test procedures interchangeable, and develop a library of commonly accepted rules. The ACT Rules also lead to the development of automated and semi-automated testing tools, which can be used by monitoring bodies to carry out the monitoring more efficiently and effectively.

At the time of writing this report, there are 5 such ACT Rules formally published by W3C, with several more developed by the WAI-Tools Project that are in the process of approval and publication. The WAI-Tools Project aims to develop 70 test rules through an open community process and contribute them to the W3C process for approval and publication. The ACT Rules developed by the project are implemented in open-source engines developed and maintained by the project partners Deque, FCID (University of Lisbon), and Siteimprove.

As a part of WAI-Tools Work Package 2 in the project, the Norwegian Digitalisation Agency has performed a pilot monitoring, based on the requirements for monitoring as referred to in the Directive. The pilot is built upon an analysis of the requirements in the Directive and encompasses both the simplified and the in-depth monitoring process.

To perform this pilot monitoring, the Norwegian Digitalisation Agency has used the draft ACT Rule implementations developed by Deque, FCID, and Siteimprove available at the time, to test a limited number of websites. Mobile applications and downloadable documents were not tested in the pilot.

The outcomes of this effort, combined with the documentation of the ACT rules developed in WAI-Tools, form a “demonstrator” from Norway as required by the project. The pilot has provided valuable experience and documentation to be used in further preparations for monitoring.

Preparations for the pilot started in September 2019 and the pilot was concluded in March 2020. The project partners have contributed to the report with valuable input. Their comments and amendments are included in the final report.

2. Summary

In this chapter, we present a summary of the pilot monitoring effort. The objective of the pilot has been to explore and try out all the steps of the monitoring process, based on a sample of four public sector bodies and websites, within the timeframe of the pilot.

The focus has not been to produce test data and other data in the amount needed for analysis and reporting in line with the Directive, but rather to gain experience and identify which issues need to be followed up in order to be prepared for real monitoring.

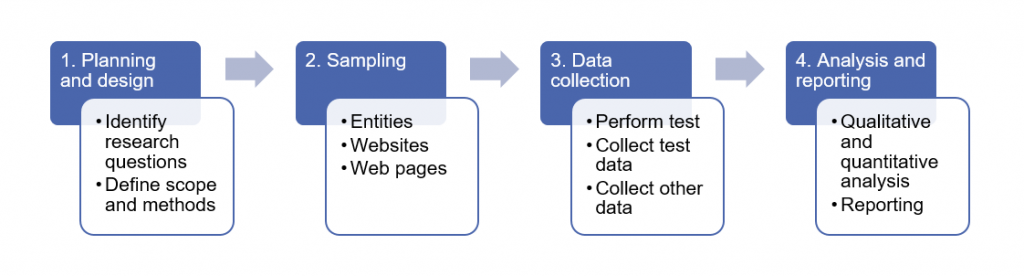

The monitoring process may be regarded as a sample survey that consists of the following steps:

- Planning and design

- What are the requirements in the Directive for monitoring and reporting?

- What issues and research questions should be investigated?

- What data do we need to cover the research questions and perform reporting?

- Which requirements in the standard are relevant to include in the monitoring?

- Sampling

- How can we select a sample of entities, websites, and test pages?

- Data Collection, including test

- What experiences did we gain in the pilot regarding data sources, methods, and tools for collecting data?

- Analysis and reporting

- What did we experience in the pilot in our effort to establish a dataset suitable for analysis and reporting?

In the following, we summarize the findings and learning points from each phase or step in the monitoring process. The learning points are also presented at the end of the respective chapters.

2.1 Step 1: Planning and design

Planning the monitoring is essential, especially for the first couple of monitoring rounds and for the first reporting to the EU. Based on the requirements for monitoring and reporting in the Directive, we must decide which issues and questions should be investigated in the monitoring, and in what way.

Therefore, we performed an analysis of the requirements for monitoring and reporting in the Directive as described in chapter 4.1. Based on the documentation of the requirements, we have derived the amount and composition of the sample, and also which data we must collect through the monitoring process, in order to perform the necessary analysis and reporting, as described in chapter 4.2.

Through the planning process, we also selected which Success Criteria to include. In the pilot, this was limited by the partially incomplete implementation status of the tools as of February 2020.

In real monitoring, we will, in addition to what is implemented in the tools chosen for testing, also take into consideration experiences from earlier monitoring efforts, and probably also do semi-automated and manual tests in order to cover requirements with high risk for non-compliance. This applies especially to simplified monitoring. For in-depth monitoring, all the requirements will be included.

Findings and learning points:

- It is crucial to analyse the Directive to identify requirements for monitoring and reporting.

- Before starting the test and other data collection, we must define, as precisely as possible, which research questions are to be investigated, and then ensure that we collect all the data needed for analysis and reporting. The research questions are listed in chapter 4.1.6.

- The requirements for monitoring, reporting, and the list of research questions underlie decisions on the following:

- The sample of public sector bodies/entities, web solutions, test objects/pages, etc. This applies to the size and composition of the sample, and the selection method for entities, web solutions, and pages.

- The monitoring methods, tools, and test mode (automated, semi-automated, manual)

- For simplified monitoring: which Success Criteria shall be included, especially considering that automated test is preferable.

- Data needs, and the methods and data sources for data collection

- Which analyses must be performed in order to report in alignment with the Directive

- In this pilot:

- We used 19 ACT rules that covered 13 WCAG 2.1 Success Criteria. In our opinion, it is of vital importance that this work proceeds until all the accessibility requirements in the Directive are covered. This is due to the need for a documented, transparent, and commonly accepted test method.

- We met the requirements for simplified monitoring by covering the 4 principles in WCAG as well as 7 of 9 user accessibility needs

- Since we used the same ACT Rules and Success Criteria in both simplified and in-depth monitoring, we covered 29 % of the Success Criteria required by the Directive (for the in-depth monitoring).

- Even though we had a somewhat limited scope regarding the number of requirements included, we came close to meeting the minimum requirements for simplified monitoring. However, based on findings in previous monitoring in Norway, there were several high-risk Success Criteria that were not covered in the pilot.

- This was because neither the development of test rules nor the implementation of test rules was completed when the pilot testing was performed. High-risk Success Criteria are continuously being addressed in the project.

- Regardless of this, one should be aware that the selection (and exclusion) of Success Criteria in the monitoring may mean that significant accessibility problems are not uncovered by the monitoring. This applies especially to the simplified monitoring.

- In the foreseeable future, we consider that there will be a need to supplement automated tests with both semi-automated and manual tests to cover all the Success Criteria and requirements in the standard. This applies especially to the in-depth monitoring.

Especially when planning the first monitoring and reporting:

- There is a comprehensive need to collect and store data in both simplified and in-depth monitoring, as described in chapter 4.2. The data must be collected from diverse data sources. We need to establish a data model to structure the data, in order to facilitate efficient data storage and retrieval. This data model is to some extent built on the open data format for accessibility test results that was developed by the project and implemented in the tools as one of the output formats.

- We plan to use the accessibility statements to collect structured data about the public sector bodies, web solutions, area of services, and individual services per entity. Later, we will consider combining the accessibility statements with automated tests that the entities can perform themselves. Part of the WAI-Tools project is to develop a prototype large-scale data browser, which would collect and analyse data from accessibility statements. However, this was not available at the time of carrying out the pilot and may be considered in the future.

- However, it should be considered whether the requirements for monitoring and reporting are too extensive, especially when it comes to data needed to compose the samples of entities, web solutions, and pages, in line with the Directive (as described in Chapter 4.2.1-4.2.3). This applies in particular to the amount of services, processes, pages, and documents that shall be tested in the in-depth monitoring. Maybe a limited sample of services may be enough, instead of monitoring them all.

In addition, we have identified a short-list of criteria that should be considered when selecting a tool in real monitoring:

- The coverage of WCAG, i.e. the tool should cover as many requirements/Success Criteria as possible

- The tool should as far as possible secure the needs for transparency, reproducibility, and comparability, thus

- it must be based on a documented interpretation of each of the requirements in the standard.

- as far as possible, be based on the ACT Rules, as they meet the need for a documented, transparent, and commonly accepted test method.

- the test rules (and the way they are implemented in the tools) must be documented in order to show which interpretation of the requirements is covered by each test.

- the tool should include or be combined with a crawler that is suitable for sampling most of the pages and content that should be included in the monitoring.

- the test results should specify the outcome of the tests like passed, failed, inapplicable (and not tested).

- the tool should preferably give test results at both the page and the element level, specified per Success Criterion.

- the number of tested elements and pages, especially failed elements and pages, should be counted and identified, per Success Criterion and in total.

- the test results should be in a format suitable for analysis and reporting in line with the Directive and provide the web site owners/the public sector bodies with the necessary information in their work for improving their websites.
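The criteria above concerning outcome categories and counts per Success Criterion can be illustrated with a minimal Python sketch. The record layout, the sample data, and the function name `count_outcomes` are assumptions made for illustration only; they do not reflect the output format of any of the tools used in the pilot.

```python
from collections import Counter
from dataclasses import dataclass

# Outcome categories named in the text; "not tested" is the optional fourth one.
OUTCOMES = ("passed", "failed", "inapplicable", "not tested")


@dataclass(frozen=True)
class ElementResult:
    """One test outcome for one element on one page (hypothetical layout)."""
    page_url: str
    element: str            # e.g. a CSS selector identifying the tested element
    success_criterion: str  # e.g. "1.1.1"
    outcome: str            # one of OUTCOMES


def count_outcomes(results):
    """Count element-level outcomes per Success Criterion and in total."""
    per_criterion = {}
    total = Counter()
    for r in results:
        if r.outcome not in OUTCOMES:
            raise ValueError(f"unknown outcome: {r.outcome!r}")
        per_criterion.setdefault(r.success_criterion, Counter())[r.outcome] += 1
        total[r.outcome] += 1
    return per_criterion, total


# Made-up results for two pages of a fictional website.
results = [
    ElementResult("https://example.org/", "img#logo", "1.1.1", "passed"),
    ElementResult("https://example.org/", "img.banner", "1.1.1", "failed"),
    ElementResult("https://example.org/contact", "input#email", "4.1.2", "failed"),
    ElementResult("https://example.org/contact", "html", "3.1.1", "passed"),
]
per_criterion, total = count_outcomes(results)
```

Because each record keeps the page URL and the element, the same data can be handed to the website owner to locate failed elements and aggregated for reporting.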

2.2 Step 2: Sampling

In this chapter, we summarize the findings and learning points from the sampling of public sector bodies/entities, websites, and pages.

Sampling of public sector bodies/entities:

- We will benefit from developing an efficient method for establishing a representative sample in line with the Directive. This will hopefully contribute to the reliability and social significance of the analysis on accessibility barriers uncovered by the monitoring.

- A representative sample will allow us to generalize the monitoring results and establish a national indicator on the degree of compliance with the accessibility requirements set out in the Directive, and an overall accessibility indicator for websites. This may also be suitable for benchmarking purposes.

- In order to select a representative sample, we need information about the population of public sector bodies. For this purpose, The Norwegian Register of Legal Entities (or similar) can be used for drawing a sample of public sector bodies.

- The classifications of the institutional sector and industry as listed in the register can be useful to determine the level of administration (state, regional, local, body governed by public law). Based on a combination of the classifications of the institutional sector and industry, we can also get a brief indication of the area of service.

- There is great potential in more automation, for instance by drawing (as far as possible) a random sample from The Norwegian Register of Legal Entities (or similar), based on specifications of the criteria mentioned in the Directive.
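As a rough illustration of the automation mentioned above, the following Python sketch draws a random sample of entities stratified by level of administration. The register rows, the field names `org_name` and `level`, and the quotas per level are hypothetical; a real extract from the Norwegian Register of Legal Entities will have a different structure, and the quotas would have to follow from the criteria in the Directive.

```python
import random
from collections import defaultdict


def draw_stratified_sample(register, quotas, seed=None):
    """Draw a random sample of entities per level of administration.

    register: list of dicts with (hypothetical) keys "org_name" and "level".
    quotas:   dict mapping level -> number of entities to draw.
    seed:     fixed seed so the draw can be documented and reproduced.
    """
    rng = random.Random(seed)
    by_level = defaultdict(list)
    for entity in register:
        by_level[entity["level"]].append(entity)

    sample = []
    for level, wanted in quotas.items():
        candidates = by_level.get(level, [])
        # Never ask for more entities than the stratum contains.
        sample.extend(rng.sample(candidates, min(wanted, len(candidates))))
    return sample


# Made-up register extract: ten entities per level of administration.
LEVELS = ["state", "regional", "local", "body governed by public law"]
register = [
    {"org_name": f"{level} entity {i}", "level": level}
    for level in LEVELS
    for i in range(10)
]
quotas = {"state": 3, "regional": 2, "local": 4, "body governed by public law": 1}

sample = draw_stratified_sample(register, quotas, seed=2020)
```

Recording the seed together with the register extract is one way to make the draw reproducible, which supports the need for a documented sampling method.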

Sampling of websites:

- There is no register in Norway that is suitable for drawing a sample of websites. Thus, the total website population is unknown. To locate and draw a sample of websites that fits 100 percent with the criteria in the Directive, we need to collect data that shows which web solutions belong to each selected entity.

- Some entities have more than one website. In these cases, we need to determine which website to include in the monitoring. On the other hand, some entities share web solutions with others, and in those cases, we need to identify who is responsible. In the pilot, we needed to combine information from the register with online searching for public bodies’ websites. This is time-consuming.

- A combination of data from the Norwegian Register of Legal Entities and data input from the entities through the accessibility statements might be an efficient method. We can use the statements to collect structured data on the level of administration, area of service, which websites (and mobile applications) are relevant for monitoring, etc.

- Still, there are important questions concerning the sampling requirements that are not specified in the Directive, such as:

- Whether or not the sample for simplified and in-depth must be selected in separate operations.

- Whether the samples for simplified and in-depth monitoring, respectively, shall both aim for a diverse, representative, and geographically balanced distribution.

- Whether the in-depth monitoring can be based on a selection of the websites in the simplified monitoring.

It would be very helpful if these above-mentioned matters could be clarified.

Sampling of pages (and documents) - in-depth monitoring:

- It should be considered whether the requirements for the sample of test pages are too extensive. The sampling of web pages is a complex and time-consuming task that requires manual effort. Establishing a dialogue with the website owners for the sampling of pages and documents might be considered. However, we must consider whether this is cost-efficient.

- The terms “Type of service” and “Process” should be defined, in order to avoid a random approach when identifying services and processes. In real monitoring, this also applies to downloadable documents, as the Directive requires testing of at least one relevant downloadable document, where applicable, for each type of service provided by the website.

- If the sampled pages in in-depth monitoring are part of a process, the Directive requires that all steps in the process are monitored. In our experience, processes may require log-in using a national ID number. Therefore, it is crucial that we establish a method for acquiring log-in access to these processes/web pages.

Sampling of pages (and documents) - simplified monitoring:

- We need a scale or criteria for assessing what will be a suitable number of test pages, based on the estimated size and the complexity of the website that shall be monitored.

- For simplified monitoring, we need access to a crawler to sample web pages. However, the crawler in the different tools can use different methods to crawl the websites. One should, therefore, be aware that this can cause the tools to sample different pages on the same website.

- Crawling is also suitable to estimate the size of a website. But in our experience, the crawlers did not find (and count) hidden pages, subdomains, or pages that require log-in.

- In the absence of adequate alternatives, we still consider using a crawler to sample web pages (and estimate the size) to be the most efficient method.
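The points above about crawlers can be illustrated with a small Python sketch that runs on an in-memory link graph instead of a real website. The site structure and the function `crawl` are hypothetical and do not describe how the crawlers in the pilot tools work; the sketch only shows that two crawl orders with the same page limit can select different pages, and that a page without inbound links is never found or counted.

```python
from collections import deque

# Hypothetical site structure: each page maps to the pages it links to.
# "/internal/report" exists but is not linked from anywhere (a "hidden" page).
SITE = {
    "/": ["/services", "/about"],
    "/services": ["/services/apply", "/services/fees"],
    "/about": ["/about/contact"],
    "/services/apply": [],
    "/services/fees": [],
    "/about/contact": [],
    "/internal/report": [],
}


def crawl(site, start, limit, breadth_first=True):
    """Return up to `limit` pages reachable from `start`, in visiting order."""
    frontier = deque([start])
    seen = {start}
    visited = []
    while frontier and len(visited) < limit:
        # Breadth-first takes the oldest discovered page, depth-first the newest.
        page = frontier.popleft() if breadth_first else frontier.pop()
        visited.append(page)
        for link in site.get(page, []):
            if link not in seen:
                seen.add(link)
                frontier.append(link)
    return visited


bfs_sample = crawl(SITE, "/", limit=4, breadth_first=True)
dfs_sample = crawl(SITE, "/", limit=4, breadth_first=False)
estimated_size = len(crawl(SITE, "/", limit=1000))
```

With a limit of four pages, the breadth-first crawl includes `/services/apply` while the depth-first crawl includes `/about/contact` instead. The size estimate is six pages although the site has seven, because the unlinked page is never reached.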

Sampling of pages (and documents) - both in-depth and simplified:

- We need a method to exclude pages that include third party content and other content exempt from the Directive.

- We need to explore whether it is possible to test web pages that require log-in, using automated tools. This applies primarily to in-depth monitoring, but it is desirable to be able to cover this type of content in simplified monitoring as well.

2.3 Step 3: Data collection

This step covers both the production of test data and other data needed for monitoring and reporting. The most important findings and learning points are listed.

Data about the entities:

- We managed to collect the data about the public sector bodies as listed in chapter 4.2.1. Most data could be downloaded from the Norwegian Register of Legal Entities (or similar).

- The combination of institutional sector and industrial classification may be enough to determine the type of service offered by the entity (through their website), especially for simplified monitoring. For in-depth monitoring, this may be insufficient.

- In many cases, we will need to do a more comprehensive check of the actual content on the entity’s website. This might be very time consuming and the quality of the data could be insufficient. It should be considered to use the accessibility statements as a data source for this purpose. This could be far more cost-effective than manual inspections. Part of the WAI-Tools project is to develop a prototype large-scale data browser, which would collect and analyse data from accessibility statements. This could be considered for future exploration.

Data about the websites:

- In the pilot, we combined data from the Norwegian Register of Legal Entities with searching on the internet in order to locate the selected public sector bodies' website URLs. It should be considered to make it mandatory to report the web solution addresses to the register, and/or arrange the accessibility statements so that this data could be reported directly to the monitoring body.

- In some cases, data about the area of service can be registered at the entity level. In other cases where the entity offers a wide scope of services, and in addition has different websites for different kinds of services, the area of service must be defined at the web solution level. This applies for example to the Norwegian Digitalisation Agency.

- Some entities/websites offer a wide array of services. In the pilot, we only sampled a few services from the website we monitored in-depth. In a real monitoring we will need an overview of all the services offered by an entity/through a website, as the Directive requires that at least one relevant page for each type of service provided by the website be monitored. In consequence, we need to collect extensive information about the various services offered by the entity/website. Therefore, it should be reviewed to what extent this is cost-effective and whether it should instead be possible to monitor a sample of services, rather than including them all.

- Identifying the services offered on a website is challenging. As a rule of thumb, municipal websites will in most cases have a more standardized (statutory) set of service offerings. This will also be the case for many of the regional websites. For the state websites and those belonging to bodies governed by public law, there are most likely wide variations, both in scope, complexity, and in terms of what services they offer. One should consider using the web accessibility statements to gather this kind of information, possibly supplemented by dialogue with the public sector bodies if further information is needed.

Data about pages:

- For simplified monitoring, only the homepage needs to be identified (and documented). For in-depth monitoring, more data about the pages is needed than for simplified monitoring.

- We managed to identify and register data about the web pages that are required for the in-depth monitoring, given they were present at the website.

- For assessing whether a page is a part of a process, and for checking that we sampled the pages listed in the Implementing Decision, we had to inspect the website manually. That was the only way we managed to collect the data about the web pages, as listed in chapter 4.2.3.

- The process was time-consuming and relied on participation from the website owner. In a real monitoring, it might be considered too extensive to have dialogues with the website owners in order to collect data about the sampled web pages. Therefore, it might be useful to review whether it is necessary to sample (and document) the test pages in as much detail as described in the Implementing Decision.

- Either way, we need information/data that connects the pages to services and processes. Therefore, the terms “Type of service” and “Process” should be defined.

Data about the requirements:

- Collecting data about the requirements was done by consulting the EN standard.

- More specifically, information about which user accessibility needs correspond with each requirement/Success Criterion is to be found in the standard EN 301 549, Annex B.1. This data helps us analyse which digital barriers users with different user accessibility needs meet on the internet.

Data about the test results:

- Using the three tools provided by the project partners, we managed to collect test results at the page level per Success Criterion for the in-depth monitoring, and individual test results per Success Criterion, for both the in-depth and the simplified monitoring.

- We used two of the three tools in the simplified monitoring. We did not manage to collect test results at the page level since we were not able to establish a model for converting test results from the test rule level to the Success Criterion level. The method used for this purpose in the in-depth monitoring, was not feasible for the simplified monitoring, due to the huge number of pages tested (up to 1 000 pages per website).

- All three tools reported test results in the category “failed”, while one of the three also reported results in the categories “passed” and “inapplicable”. In our opinion, it is preferable to have data about the test results that cover all three categories “passed”, “failed” and “not applicable”.

- For two of the tools, it was also difficult to ascertain whether a web page had been tested.

- It was challenging to obtain the number of unique failed pages per Success Criterion by using the tools in their current status. In a real monitoring, we need to extract test results at the Success Criterion level. A solution could be to arrange the export functions from the tools so that we could retrieve results for unique pages per Success Criterion directly.

- We spent a significant manual effort to extract and present the data. We also struggled with exporting test data from the tools into another format, suitable for distribution to the website owners. We also need a data format that is suitable for further analysis.

- If we had had the opportunity to dig deeper into these issues within the timeline of the pilot, it is possible that the tool vendors could have assisted us in producing and converting test results more efficiently. This data model is to some extent built on the open data format for accessibility test results that was developed by the project and implemented in the tools as one of the output formats.

Note: It is important to emphasize that if we had had the opportunity to dig deeper into these issues during the pilot, the project partners responsible for the tools most likely could have helped us in producing, exporting, and converting test results more efficiently.
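The conversion from the test rule level to the Success Criterion level, and the count of unique failed pages per Success Criterion, could look roughly like the Python sketch below. The rule identifiers, the rule-to-criterion mapping, and the input tuples are hypothetical and are not taken from the tools or from the ACT Rules website. The sketch also assumes that a page fails a Success Criterion as soon as one rule mapped to that criterion fails on the page.

```python
from collections import defaultdict

# Hypothetical mapping from test rule identifiers to WCAG Success Criteria.
# A single rule may map to more than one Success Criterion.
RULE_TO_CRITERIA = {
    "rule-image-name": ["1.1.1"],
    "rule-button-name": ["4.1.2"],
    "rule-link-name": ["2.4.4", "4.1.2"],
}


def failed_pages_per_criterion(rule_results, rule_to_criteria):
    """Map rule-level failures to the set of unique failed pages per criterion.

    rule_results: iterable of (page_url, rule_id, outcome) tuples.
    """
    failed = defaultdict(set)
    for page_url, rule_id, outcome in rule_results:
        if outcome != "failed":
            continue
        for criterion in rule_to_criteria.get(rule_id, []):
            # Using a set means a page is counted once per criterion,
            # no matter how many elements or rules failed on it.
            failed[criterion].add(page_url)
    return dict(failed)


# Made-up rule-level results, e.g. as exported from a test tool.
rule_results = [
    ("https://example.org/", "rule-image-name", "failed"),
    ("https://example.org/", "rule-image-name", "failed"),   # second image, same page
    ("https://example.org/a", "rule-link-name", "failed"),
    ("https://example.org/a", "rule-button-name", "failed"),
    ("https://example.org/b", "rule-button-name", "passed"),
]

failed_pages = failed_pages_per_criterion(rule_results, RULE_TO_CRITERIA)
failed_page_counts = {sc: len(pages) for sc, pages in failed_pages.items()}
```

Since the aggregation is a single pass over the exported results, the number of tested pages should not be a limiting factor in itself, provided the tools can export rule-level results in a machine-readable format.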

Data about the test results – additional comments:

- Documentation of test methods and tools (including versions) is essential to secure transparency, reproducibility, and comparability. In general, it is crucial to investigate the documentation of the tools in order to be in control of what is tested and how the tests are performed.

- Given that this pilot was carried out during active project development, it was challenging to determine which version of an ACT Rule was implemented in each of the three tools, as only information about when each ACT Rule was last updated is available on the ACT Rules website. The ACT Rules website also has an overview of the different implementations of the ACT Rules, but it is not specified which version or when the various ACT Rules were implemented in the tools. We expect this situation may improve as ACT Rules get formally published by W3C, and implementations of these stabilize.

Data about the monitoring and reporting:

- Data about monitoring and reporting can easily be collected from the planning and documentation of the monitoring. This data includes, among other things, information about the monitoring period and the body in charge of the monitoring. A complete list is shown in the table in chapter 6.6.

2.4 Step 4: Analysis and reporting

It was not within the scope of the pilot to produce test data in the amount needed for performing analysis and reporting as described in the Directive. Therefore, a summary presents our experiences in establishing a data set suitable for analysis and reporting. We will also present our thoughts and reflections regarding the calculation of compliance level and other issues which in our opinion need clarification.

The findings are summarized below:

- Regarding the questions of the

- sample of entities and websites

- sampling method

- Success Criteria and test methods

- user accessibility needs

the data collected in the pilot are assessed to be sufficient and suitable for performing the analysis needed for reporting.

- Together with the test results, the data about the monitoring are crucial for answering the research questions.

Further reflections about analysis and reporting:

- We need a documented method for the sampling of entities and websites and, as far as possible, an overview of the population of entities and websites. This is to form a basis of assessing to what extent the monitoring results can be generalized. We also need a consistent way of sampling test pages. This is crucial both for comparing results between websites, the categories of public sector bodies, and when comparing results from different monitoring periods.

- Based on the requirements for reporting, the monitoring bodies need a method and a scale to express quantified results of the monitoring activity, including quantitative information about the level of accessibility.

- The quantified test results per Success Criterion and the mapping to the user accessibility needs form the basis for a qualitative analysis of the outcome of the monitoring, especially the findings regarding frequent or critical non-compliance. Thus, we need a method for performing the qualitative analysis as described in the Directive and a template for reporting to the EU.

- There is also a need for a clarification of the term “compliance level” (or compliance status). The monitoring bodies need a (simple) method and a scale to express quantified results of the monitoring activity, including quantitative information about the level of accessibility.

- According to the standard, the basis for calculating the level of compliance is test results at the page level. Thus, we need a way to extract aggregated test data directly from the tools that shows both the number of tested pages and the number of unique pages that fail on each Success Criterion. This applies to both the simplified and the in-depth monitoring.

- According to the standard, we may calculate the compliance level as the percentage of the tested pages that fully comply with all the Success Criteria included, specified by in-depth and simplified monitoring.

- On the other hand, calculating the level of compliance at the element level in a simple way will give us a more nuanced picture of compliance status. An example:

- count number of tested elements per identified Success Criterion, specified by the outcome of each tested element (passed, failed, inapplicable and perhaps, not tested)

- calculate the compliance level as the percentage of tested elements that comply with the requirements. This may also facilitate benchmarking and measurement of trends in level of compliance.

- Based on the compliance level at the website level, the average or aggregated compliance status for all the websites in each monitoring, specified by in-depth and simplified monitoring, could be calculated.

- Similar calculations should also be made

- per category (level of administration) of public sector bodies

- per Success Criterion

- Since there are multiple ways of calculating the compliance status, there is a need for a clarification of the term “compliance” and how it should be measured.

- There is also a need for reporting test results that identify which elements on the tested pages are not in compliance. This supports the website owners in their efforts to correct failed elements.
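The page-level aggregation described in the list above can be sketched as follows. This is a minimal illustration only, not the method used by any of the tools in the pilot. The function name `summarise_pages` and the tuple layout `(page_url, success_criterion, outcome)` are assumptions made for the example.

```python
from collections import defaultdict

def summarise_pages(results):
    """Aggregate page-level results.

    `results` is an iterable of (page_url, success_criterion, outcome)
    tuples (a hypothetical layout), where outcome is "passed", "failed"
    or "inapplicable".
    """
    tested_pages = set()
    failing_pages_per_sc = defaultdict(set)
    for page, sc, outcome in results:
        tested_pages.add(page)
        if outcome == "failed":
            # A set keeps each page only once per Success Criterion.
            failing_pages_per_sc[sc].add(page)

    # Pages that fail at least one Success Criterion.
    failing_pages = set().union(*failing_pages_per_sc.values())
    compliant_pages = len(tested_pages) - len(failing_pages)
    percent = 100.0 * compliant_pages / len(tested_pages) if tested_pages else None
    return {
        "tested_pages": len(tested_pages),
        "failing_pages_per_sc": {sc: len(p) for sc, p in failing_pages_per_sc.items()},
        "page_compliance_percent": percent,
    }
```

A page counts as compliant here only if none of its results is "failed", which follows the page-level reading of the standard described above.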

3. Objectives of the pilot

Member States shall monitor the compliance of websites and mobile applications of public sector bodies with the accessibility requirements provided for in Article 4 of the Directive, on the basis of the methodology set out in the Commission Implementing Decision, on the grounds of requirements identified in the standards and technical specifications referred to in Article 6 of the Directive.

The Directive includes requirements regarding

- sampling of websites and mobile applications to be monitored.

- which types of webpages and documents to be monitored in-depth.

- presentation and reporting of the monitoring results.

- providing the results to the public sector bodies responsible for the solutions that have been monitored.

The objective of the pilot is to gain experience with the entire monitoring process as described in the Directive and Implementing Decision. The goal is not to solve all the issues encountered in the various steps of the pilot monitoring, but rather to identify and address what will need follow-up in order to be prepared for the monitoring.

The monitoring process may be regarded as a sample survey that consists of a series of steps, as presented in Figure 1.

Based on such an understanding of the monitoring process, we have defined the following questions to be investigated in the pilot:

- What are the requirements in the Directive for the simplified monitoring, the in-depth monitoring, and the reporting?

- What issues and research questions should be investigated in a monitoring in general?

- What data do we need to cover the research questions and perform reporting in line with the Directive?

- Which requirements in the standard are relevant to include in the monitoring, based on the assumption that testing shall be done using automated testing methods/tools?

- How can we select a sample of entities, websites, and test pages?

- What experiences did we gain in the pilot regarding data sources, methods, and tools for collecting data?

- What did we experience in the pilot in our effort to establish a data set suitable for analysis and reporting?

The findings are summarized in learning points for further follow-up in our preparations for monitoring in line with the Directive.

The pilot was primarily focused on gathering experience with the steps in the monitoring process, as presented in Figure 1. Therefore, there was little emphasis on producing data to an extent that will be necessary to perform statistical analysis.

In the following chapters, the steps in the monitoring process are elaborated. We then summarize the key findings and learning points from the pilot for each step of the monitoring process.

4. Step 1: Planning and design of the pilot monitoring

Planning the monitoring is essential. This applies especially to the first couple of times and to the first reporting to the EU. Through the planning process we decide on the following:

- Which issues and questions should be investigated in the monitoring. This is largely determined by the requirements for monitoring and reporting in the Directive, and further by which requirements/Success Criteria we include in the monitoring.

- The size and composition of the sample of entities and web solutions included in the monitoring. This also follows from the Directive.

- What data we need to answer the questions that underlie the monitoring. This will be investigated based on the bullet points above.

- Which data sources, methods, and tools are the most suitable for collecting data.

- What analysis must be done to report in accordance with the requirements of the Directive.

4.1. Requirements for monitoring and reporting in the Directive

The Member States shall monitor the compliance of websites and mobile applications of public sector bodies with the accessibility requirements provided for in Article 4 of the Directive on the basis of the methodology set out in the Commission Implementing Decision, on the grounds of requirements identified in the standards and technical specifications referred to in Article 6 of the Directive.

4.1.1. Monitoring methods

The Member States shall monitor the conformity of websites and mobile applications of public sector bodies using:

- an in-depth monitoring method to verify compliance

- a simplified monitoring method to detect non-compliance

The in-depth monitoring shall

- thoroughly verify whether a website or mobile application satisfies all the requirements identified in the standards and technical specifications referred to in Directive (EU) 2016/2102 Article 6.

- verify all the steps of the processes in the sample, following at least the default sequence for completing the process.

- evaluate at least the interaction with forms, interface controls and dialogue boxes, the confirmations for data entry, the error messages and other feedback resulting from user interaction when possible, as well as the behavior of the website or mobile application when applying different settings or preferences.

The simplified monitoring method shall

- detect instances of non-compliance with a sub-set of the requirements in the standards and technical specifications referred to in the Directive.

- include tests related to each of the requirements of perceivability, operability, understandability, and robustness

In addition, the simplified monitoring method shall

- inspect the websites for non-compliance.

- aim to cover the following user accessibility needs to the maximum extent it is reasonably possible with the use of automated tests:

- usage without vision

- usage with limited vision

- usage without perception of colour

- usage without hearing

- usage with limited hearing

- usage without vocal capability

- usage with limited manipulation or strength

- the need to minimise photosensitive seizure triggers

- usage with limited cognition

4.1.2. Compliance and non-compliance

In simplified monitoring, we shall detect instances of non-compliance, while in in-depth monitoring we shall verify compliance. For definitions of compliance and non-compliance, the Directive refers to the EN 301 549 standard.

The standard states that:

"A page satisfies a WCAG Success Criterion when the Success Criterion does not evaluate to false when applied to the page. This implies that if the Success Criterion puts conditions on a specific feature and that specific feature does not occur in the page, then the page satisfies the Success Criterion."

Thus, for each Success Criterion, the check for compliance or non-compliance happens at the page level. For a web solution to comply with the requirements in the Directive, all tested pages must comply.

Determination of compliance is defined in the following way:

"Compliance is achieved either when the pre-condition is true and the corresponding test [in Annex C in EN 301 549] is passed, or when the pre-condition is false (i.e. the pre-condition is not met or not valid)."

For each of the requirements regarding pages, the pre-conditions and tests are stated as shown in the table below, for all relevant Success Criteria.

Example: 1.1.1 Non-text content

| Type of assessment | Inspection |

|---|---|

| Pre-conditions | 1. The ICT is a page |

| Procedure | 1. Check that the page does not fail WCAG 2.1 Success Criterion 1.1.1 Non-text content |

| Result | Pass: Check 1 is true Fail: Check 1 is false |

This implies that the compliance status of a web solution is based on combinations of outcomes/categories of test results. A page complies when no test results are in the category “failed” (i.e. “non-compliance”), that is, when all test results are in the category “passed” and/or “inapplicable” (e.g. the type of content targeted in a test is not present on the actual test page).

It may become challenging to calculate the compliance status of a website based on the definition of the term "compliance" as described in the standard. In our experience from previous monitoring efforts, the number of failed elements on a page, linked to each of the Success Criteria where non-compliance is detected, will give us a more nuanced picture of compliance status. If we in addition get information about how many elements have been tested for each Success Criterion, and the outcome for each tested element, we would be able to calculate the level of compliance in a simple way. This may also facilitate benchmarking and measurement of trends in the level of compliance.
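The element-level calculation suggested above can be sketched as follows. This is one possible reading, not a definition from the standard or the Directive. The function name `element_compliance_per_sc` is hypothetical, and the choice to count only "passed" and "failed" elements in the denominator (leaving out "inapplicable" and "not tested") is an assumption made for the example.

```python
from collections import Counter, defaultdict

def element_compliance_per_sc(element_results):
    """Return the percentage of assessed elements that passed, per Success Criterion.

    `element_results` is an iterable of (success_criterion, outcome) pairs.
    Assumption: only "passed" and "failed" elements count as assessed.
    """
    counts = defaultdict(Counter)
    for sc, outcome in element_results:
        counts[sc][outcome] += 1

    levels = {}
    for sc, c in counts.items():
        assessed = c["passed"] + c["failed"]
        # None signals that no element could be assessed for this criterion.
        levels[sc] = 100.0 * c["passed"] / assessed if assessed else None
    return levels
```

With three passed and one failed image for 1.1.1, the sketch gives 75 % for that criterion, whereas the page-level reading would simply report the page as non-compliant.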

4.1.3. Sampling of public sector bodies and web solutions

The number of websites and mobile applications to be monitored in each monitoring period shall be calculated based on the population of the Member State. The sampling of websites shall aim for a diverse, representative, and geographically balanced distribution. The sample for mobile applications shall aim for a diverse and representative distribution.

Note: In the following, we focus on sampling and monitoring of websites, since websites are the subject of the pilot.

The sample shall cover websites from the following levels of administration:

- a) state websites

- b) regional websites (NUTS1, NUTS2, NUTS3)

- c) local websites (LAU1, LAU2)

- d) websites of bodies governed by public law not belonging to categories a) to c)

The sample shall include websites representing as much as possible the variety of services provided by the public sector bodies, in particular the following: social protection, health, transport, education, employment and taxes, environmental protection, recreation and culture, housing and community amenities and public order and safety.

The Member States shall consult national stakeholders, in particular organisations representing persons with disabilities, on the composition of the sample of the websites to be monitored and give due consideration to the stakeholders' opinion regarding specific websites to be monitored.

Note: National stakeholders were not consulted in the pilot.

4.1.4. Sampling of pages

The requirements for the monitoring of web pages are specified for each monitoring method. For the in-depth monitoring method, the following pages and documents, if existing, shall be monitored:

- a) the home, login, sitemap, contact, help and legal information pages

- b) at least one relevant page for each type of service provided by the website or mobile application and any other primary intended uses of it, including the search functionality

- c) the pages containing the accessibility statement or policy and the pages containing the feedback mechanism

- d) examples of pages having a substantially distinct appearance or presenting a different type of content

- e) at least one relevant downloadable document, where applicable, for each type of service provided by the website or mobile application and any other primary intended uses of it

- f) any other page deemed relevant by the monitoring body

- g) randomly selected pages amounting to at least 10 % of the sample established by points a) to f)
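The last point in the list above can be illustrated with a small sketch of how the random pages might be drawn. The function names, the `candidate_urls` input (for example, URLs found by a crawler), and the use of a seed for reproducibility are assumptions made for the example, not requirements from the Implementing Decision.

```python
import random

def random_page_quota(structured_sample_size, percent=10):
    """Smallest whole number of pages that is at least `percent` % of the sample."""
    # Integer ceiling division avoids floating-point rounding surprises.
    return (structured_sample_size * percent + 99) // 100

def draw_random_pages(candidate_urls, structured_sample, percent=10, seed=None):
    """Draw random pages that are not already in the structured sample."""
    quota = random_page_quota(len(structured_sample), percent)
    pool = sorted(set(candidate_urls) - set(structured_sample))
    rng = random.Random(seed)
    # Never ask for more pages than the pool contains.
    return rng.sample(pool, min(quota, len(pool)))
```

For a structured sample of 25 pages, the quota is 3 pages, since 10 % of 25 is 2.5 and the requirement is "at least" 10 %.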

The requirements in simplified monitoring are less specific, stating that a number of pages appropriate to the estimated size and the complexity of the website shall be monitored in addition to the home page.

4.1.5. Reporting

The Member States shall submit a report to the Commission. The report shall include the outcome of the monitoring relating to the requirements in the standards and technical specifications referred to in Article 6 of the Directive.

The report shall contain:

- a) the detailed description of how the monitoring was conducted

- b) a mapping, in the form of a correlation table, demonstrating how the applied monitoring methods relate to the requirements in the standards and technical specifications referred to in Article 6 of the Directive, including also any significant changes in the methods

- c) the outcome of the monitoring of each monitoring period, including measurement data

- d) the information required in Article 8(5) of Directive (EU) 2016/2102

Note: Point d in the above list has not been a part of this pilot.

In their reports, Member States shall provide the information specified in the instructions set out in the Implementing Decision:

- The report shall detail the outcome of the monitoring carried out by the Member State.

- For each monitoring method applied (in-depth and simplified, for websites and mobile applications), the report shall provide the following:

- a comprehensive description of the outcome of the monitoring, including measurement data

- a qualitative analysis of the outcome of the monitoring, including:

- the findings regarding frequent or critical non-compliance with the requirements identified in the standards and technical specifications referred to in Article 6 of Directive (EU) 2016/2102

- where possible, the developments, from one monitoring period to the next, in the overall accessibility of the websites and mobile applications monitored.

“Measurement data” is:

- The quantified results of the monitoring activity carried out in order to verify the compliance of the websites and mobile applications of public sector bodies with the accessibility requirements set out in Article 4.

- It covers both

- quantitative information about the sample of websites and mobile applications tested (number of websites and applications with, potentially, the number of visitors or users, etc.) and

- quantitative information about the level of accessibility.

The Commission Implementing Decision does also specify optional content for the reporting.

4.1.6. Research questions

Based on the analysis of the Directive, we have identified a set of research questions for further investigation in the monitoring (of websites) that shall be conducted and reported by December 23, 2021. The questions are listed below.

Questions to be answered about the monitoring

- What size and composition of the sample of web solutions (and mobile applications) should be included in the monitoring, both in simplified and in-depth monitoring?

- How are the web solutions selected - and specifically - which solutions are selected in dialogue with stakeholders? For subsequent monitoring: What web solutions have been included in previous monitoring?

- Which Success Criteria are covered in the monitoring and how do they correspond with the principles (perceivable, operable, understandable, and robust) and the User Accessibility Needs listed in the Directive? This applies to the simplified monitoring.

- How do methods, tests, and tools identify non-compliance (simplified monitoring) and verify compliance (in-depth monitoring) with the requirements in the Directive?

Questions to be answered about the results

- What is the overall compliance status with the accessibility requirements in the Directive?

- What is the level of compliance for the websites within each category of public sector bodies? (state, regional, local and bodies governed by public law)

- For subsequent monitoring: How is the development over time when it comes to overall compliance with the requirements of the Directive?

- What is the overall compliance status for each accessibility requirement (Success Criterion)?

- Pay special attention to the Success Criteria where non-compliance is detected and to what extent non-compliance appears

- Pay special attention to which user accessibility needs are connected to Success Criteria with (frequent) non-compliance

- What is the compliance status for each of the individual web solutions that are monitored?

- The number of test pages with non-compliance should be reported

- The results should also be specified per requirement/Success Criterion, per test page where non-compliance is detected

Note: All the results shall be specified for each monitoring method, simplified and in-depth.
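Specifying the results per monitoring method and per category of public sector body can be sketched as a simple grouping. The record layout (dictionaries with the keys `method`, `level_of_administration` and `compliance_percent`) and the use of an unweighted mean are assumptions made for the example.

```python
from collections import defaultdict
from statistics import mean

def average_by_group(websites):
    """Average website compliance per (monitoring method, level of administration).

    `websites` is an iterable of dicts with the hypothetical keys
    "method", "level_of_administration" and "compliance_percent".
    """
    groups = defaultdict(list)
    for site in websites:
        key = (site["method"], site["level_of_administration"])
        groups[key].append(site["compliance_percent"])
    # Unweighted mean: every website counts equally, regardless of its size.
    return {key: mean(values) for key, values in groups.items()}
```

An unweighted mean treats a small municipal website and a large state portal alike. Whether that is appropriate depends on how the term "compliance" is eventually clarified.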

The Directive also mentions the area of service, not as an absolute criterion for sampling, but in order to cover important services directed towards many users. Our interpretation is that it is not intended for the sample to be representative of the variable area of service. However, we should consider that it shall be possible to specify the results for each area, although they are most likely not comparable.

The results should also identify which elements on the tested pages are not in compliance. This supports the website owners in their efforts to correct failed elements.

4.2. Data requisite for reporting

The Directive has detailed requirements for sampling and reporting. The Directive thus forms the basis for what data we consider necessary to collect. In the following, we present what data must be collected about the

- Public sector bodies

- Web solutions

- Test pages (and downloadable documents)

- Success Criteria/requirements, including user accessibility needs

- Test results at the page (and element) level

- Arrangements for monitoring and reporting

The different categories of data are summarised in the tables below.

Note: This is an overview only. The tables do not describe the logical structure of a database.

4.2.1. Data about the entity (the public sector body)

| Data Item | Description | Source of data requirement |

|---|---|---|

| Name of entity | Name of entity | |

| Organisation number | Number from the Norwegian Register of Legal Entities | |

| Address (geographic location) | Full address, detailing geographic location of the entity | Commission Implementing Decision (EU) 2018/1524, Annex I (2.2.1) |

| Classification of institutional sector | Number and description from the Norwegian Register of Legal Entities | It is not required by the Directive to collect this data, but the Classification of institutional sector and Standard industrial classification are helpful in determining the level of administration and the area of service |

| Standard industrial classification | Number and description from the Norwegian Register of Legal Entities | |

| Level of administration | Level of administration the entity belongs to (state, regional (NUTS), local (LAU) or body governed by public law). | Commission Implementing Decision (EU) 2018/1524, Annex I (2.2.2) |

4.2.2. Data about the ICT solution (website or mobile application)

| Data Item | Description | Source of data requirement |

|---|---|---|

| Address | Website URL or address/URL in mobile application store | |

| Name of ICT | Name or title of the ICT solution | |

| Type of ICT | Type of ICT solution (website, mobile application, possibly also specify intranets and extranets) Member States shall periodically monitor the compliance of websites and mobile applications. Intranet and extranet sites are not included in the scope (Directive (EU) 2016/2102 Article 8). | Directive (EU) 2016/2102, Article 1. Commission Implementing Decision (EU) 2018/1524, Annex I (1) |

| Area of service | Area of services provided by the entity, such as social protection, health, transport, education, employment and taxes, environmental protection, recreation and culture, housing and community amenities, public order and safety or other relevant types of classifications. | Commission Implementing Decision (EU) 2018/1524, Annex I (2.2.3) |

| Types of services (in-depth only) | A list of individual services provided by the ICT solution. | Commission Implementing Decision (EU) 2018/1524, Annex I (3.2) |

| Frequently downloaded? | For example, the number of downloads from Google Play and Appstore. This is not applicable to websites. | Commission Implementing Decision (EU) 2018/1524, Annex I (2.3.2) |

| Operating system | Operating system required to run the mobile application (e.g. Android, iOS or other). This is not applicable to websites. | Commission Implementing Decision (EU) 2018/1524, Annex I (2.3.3) |

| Last version | Version number of the last updated version of the mobile application. This is not applicable to websites. | Commission Implementing Decision (EU) 2018/1524, Annex I (2.3.4) |

| Prioritised by national stakeholders? | Have relevant stakeholders indicated the ICT solution as a priority for monitoring? | Commission Implementing Decision (EU) 2018/1524, Annex I (2.2.4) |

| Last monitored | Date for when the ICT solution was last monitored and the type of monitoring. | Commission Implementing Decision (EU) 2018/1524, Annex I (2.4) |

4.2.3. Data about the page (web page or screen in mobile application)

| Data Item | Description | Source of data requirement |

|---|---|---|

| Type of page | In-depth: Type of page, such as home, login, sitemap, contact, help and legal information, service, accessibility statement or policy, page containing the feedback mechanism, other or randomly selected page. Simplified: For simplified monitoring, only the home page needs to be specified. The other test pages do not need categorization. | Commission Implementing Decision (EU) 2018/1524, Annex I (3.2) |

| Type of service (in-depth only) | Identification of the individual service that the web page, or screen in the mobile application, is connected to | Commission Implementing Decision (EU) 2018/1524, Annex I (3.2) |

| Process (in-depth only) | Indication of whether the page is a part of a process and a brief description of the process | Commission Implementing Decision (EU) 2018/1524, Annex I (1.2.2) |

| Address | URL or other description of the location of the page | |

4.2.4. Data about downloadable document

Note: This section only applies to in-depth monitoring.

| Data Item | Description | Source of data requirement |

|---|---|---|

| Type of service | Identification of the individual service the downloadable document is connected to | Commission Implementing Decision (EU) 2018/1524, Annex I (3.2) |

| Process | Indication of whether the downloadable document is a part of a process and a brief description of the process | Commission Implementing Decision (EU) 2018/1524, Annex I (1.2.2) |

4.2.5. Data about the requirement (WCAG Success Criterion)

| Data Item | Description | Source of data requirement |

|---|---|---|

| Standard | Number and name of standard. | Directive (EU) 2016/2102, Article 6 |

| Version | Version number of the standard. | Directive (EU) 2016/2102, Article 6 |

| Requirement | Number, name of the requirement and conformance level in the standard | Directive (EU) 2016/2102, Article 6 |

| Principle in WCAG | Information about the corresponding main principle of WCAG (perceivable, operable, understandable, robust). | Directive (EU) 2016/2102, Article 6. Commission Implementing Decision (EU) 2018/1524, Annex I (1.3.2) |

| Guideline in WCAG | Information about the corresponding guideline in WCAG. | |

| Success Criterion | Number, name of the Success Criterion in WCAG 2.1 referred to in the standard | EN 301 549 V2.1.2 |

| User accessibility need | Mapping to functional performance statements (user accessibility needs) in EN 301 549. That is usage without vision, usage with limited vision, usage without perception of colour, usage without hearing, usage with limited hearing, usage without vocal capability, usage with limited manipulation or strength, the need to minimise photosensitive seizure triggers, usage with limited cognition. | Commission Implementing Decision (EU) 2018/1524, Annex I (1.3.2) |

4.2.6. Data about the test result for tested page

It follows from the EN 301 549 standard, that the check for compliance or non-compliance happens at the page level.

| Data Item | Description | Source of data requirement |

|---|---|---|

| Page or document | URL or other identification of web page (for website) or screen (for mobile application) tested. | |

| Requirement | Number, name and conformance level of the requirement in the standard, that was subject for test. | Directive (EU) 2016/2102, Article 6 |

| Test method | Information about test method, test rules, tool, test mode (automated, semi-automated, manual) and when the test method was last updated. | Commission Implementing Decision (EU) 2018/1524, Annex II (2.3 b, 1.2.4 and 1.3.3) |

| Compliance status | Status of compliance or non-compliance of the tested page based on the outcome of the tested elements. Any failed element results in non-compliance, while passed, inapplicable or untested elements only result in compliance. | Commission Implementing Decision (EU) 2018/1524, Article 5 |

| Failed elements | Number of failed elements found on the page. | Commission Implementing Decision (EU) 2018/1524, Article 7 |

| Date tested | Date the test was performed. | |

| Tested by | Name of the entity or monitoring body that performed the test. We register this in case (a later version of) the accessibility statement is used to collect test data from the entities. We then need to be able to identify which tests have been performed by the entity and which by the monitoring body. | Commission Implementing Decision (EU) 2018/1524, Annex I (1.2.5) |
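The rule in the "Compliance status" row, together with the "Failed elements" count, can be sketched as a small function. The function name and the returned dictionary keys are hypothetical. The outcome labels follow the categories mentioned in this report.

```python
def page_compliance(element_outcomes):
    """Derive the page-level status for one Success Criterion.

    `element_outcomes` is a list of outcome strings for the tested elements
    on the page: "passed", "failed", "inapplicable" or "untested".
    """
    failed = sum(1 for outcome in element_outcomes if outcome == "failed")
    # Any failed element makes the page non-compliant; an empty list
    # (no applicable content found) counts as compliant.
    status = "non-compliant" if failed else "compliant"
    return {"compliance_status": status, "failed_elements": failed}
```

A page with no applicable elements is reported as compliant, in line with the statement in the standard that a page satisfies a Success Criterion when the targeted feature does not occur on the page.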

4.2.7. Data about the test result for tested element

In addition to the page level, we also wanted to explore the opportunity to produce test results at the element level. The term “element level” can be explained as the individual components or content elements that are tested, e.g. a form element, a picture, a table, or even the entire page, dependent on which element the test rules apply to. In our opinion, this will help the public sector bodies in correcting the errors found on their websites.

| Data Item | Description | Source of data requirement |

|---|---|---|

| Tested element | Identification of the applicable element that has been tested on the page | Commission Implementing Decision (EU) 2018/1524, Article 7 |

| Page or document | URL or other identification of web page (for website) or screen (for the mobile application) tested. | |

| Requirement | Tested requirement (Success Criterion in WCAG 2.1). | |

| Outcome | The outcome of each individual test. | Commission Implementing Decision (EU) 2018/1524, Article 7 |

| Date tested | Date the test was performed. | |

| Tested by | Name of the entity or monitoring body that performed the test. We register this in case a later version of the accessibility statement is used to collect test data from the entities. We then need to be able to identify which tests have been performed by the entity and which by the monitoring body. | Commission Implementing Decision (EU) 2018/1524, Annex I (1.2.5) |

4.2.8. Data about monitoring and reporting

| Data Item | Description | Source of data requirement |

|---|---|---|

| Monitoring method | The type of monitoring performed (simplified or in-depth) | Commission Implementing Decision (EU) 2018/1524, Article 5 |

| ICT solutions monitored | Which types of ICT solutions have been monitored (websites or mobile applications) | Commission Implementing Decision (EU) 2018/1524, Article 8 (1) |

| Reporting period start | Start date of the reporting period. | Directive (EU) 2016/2102, Article 8 (4) |

| Reporting period end | End date of the reporting period. | Directive (EU) 2016/2102, Article 8 (4) |

| Monitoring period start | Start date of the monitoring period. | Commission Implementing Decision (EU) 2018/1524, Article 2 (2), Annex II (2.1 a) |

| Monitoring period end | End date of the monitoring period. | Commission Implementing Decision (EU) 2018/1524, Article 2 (2), Annex II (2.1 a) |

| Body in charge of monitoring | The authority body responsible for the monitoring | Commission Implementing Decision (EU) 2018/1524, Article 2 (2), Annex II (2.1 b) |

| Requirements tested | The requirements in EN 301 549 that are verified/checked in the monitoring | Commission Implementing Decision (EU) 2018/1524, Annex I (1.3.1) |

| Sample size simplified monitoring | Number of websites monitored in simplified monitoring | Commission Implementing Decision (EU) 2018/1524, Annex I (2.1) |

| Sample size in-depth monitoring | Number of websites monitored in-depth | Commission Implementing Decision (EU) 2018/1524, Annex I (2.1) |

| Sample size in-depth mobile applications | Number of mobile applications monitored in-depth | Commission Implementing Decision (EU) 2018/1524, Annex I (2.1) |

4.3. WCAG Success Criteria, ACT Rules and test tools

Member States shall align the monitoring according to the requirements stated in the standards and technical specifications referred to in Article 6 of Directive (EU) 2016/2102:

- an in-depth monitoring method that thoroughly verifies whether a website or mobile application satisfies all the requirements.

- a simplified monitoring method that detects instances of non-compliance on a website with a sub-set of the requirements (…) reasonably possible with the use of automated tests.

Thus, a part of the planning process is to determine which Success Criteria to include in the simplified monitoring and how to perform the tests in both the simplified and in-depth monitoring.

4.3.1. Tools used in the pilot

A part of the pilot was to perform the tests using the tools of the project partners. In December 2019 we met with the project partners Deque, FCID, and Siteimprove to ascertain which test tools to use in the pilot monitoring. Further information about the tools can be found in the appendix.

The ACT Rules developed in the project and the implementations in the tools are work-in-progress. Even though the ACT Rules were completed by the project, they do not necessarily meet the ACT objectives for consistency yet. Still, we decided to use the ACT Rules at the time of the pilot, since the objective was to try out the different steps in the monitoring process, rather than a verification of the actual ACT Rules and their implementations.

A test rule implementation means the way a test rule is interpreted and operationalized when incorporated in a test tool. A single implementation can test multiple ACT Rules. A tool or methodology can also have multiple implementations that when combined, map to a single ACT Rule.

The tools were used in their current state of development at the time of testing in the pilot. According to the documentation provided by the tool vendors, the test rules referred to in this report were completed by the project and implemented in the tools. During the pilot, we kept in contact with the project partners, in case of questions or need for support. The tools were at the Norwegian Digitalisation Agency’s disposal, free of charge for the duration of the pilot, including access to relevant supporting documentation.

One of the tools was not used in the simplified monitoring, as the tool did not have a crawler for sampling test pages when the pilot was performed.

4.3.2. WCAG Success Criteria covered by the pilot

In the pilot, we were restricted to covering Success Criteria that had accompanying ACT Rules that were completed and implemented in the project partners’ (Deque, FCID, and Siteimprove) tools at the time of testing (January/February 2020). Therefore, we included 19 ACT Rules altogether in the pilot. This applies to both simplified and in-depth monitoring. The WAI-Tools project is moving forward, and the goal is to develop 70 ACT Rules by the end of October 2020. The rules developed by the project are continually submitted to W3C for further review and final approval as W3C ACT Rules. We expect that implementations of these formally published rules will be more stable and consistent.

The selected Success Criteria cover the requirements of perceivability, operability, understandability, and robustness.

Based on

- which Success Criteria were covered by ACT Rules in the tools at the time of the pilot,

- the four principles in WCAG, and

- which user accessibility needs shall be considered,

we have selected the following 13 Success Criteria for the pilot:

- 1.1.1 Non-text Content

- 1.2.2 Captions (Prerecorded)

- 1.2.3 Audio Description or Media Alternative (Prerecorded)

- 1.3.1 Info and Relationships

- 1.3.4 Orientation

- 1.3.5 Identify Input Purpose

- 2.2.1 Timing Adjustable

- 2.4.2 Page Titled

- 2.4.4 Link Purpose (In Context)

- 3.1.1 Language of Page

- 3.1.2 Language of Parts

- 4.1.1 Parsing

- 4.1.2 Name, Role, Value

The following user accessibility needs (or Functional Performance Statements) have a primary relationship to the Success Criteria included in the pilot:

- Usage without vision

- Usage with limited vision

- Usage without hearing

- Usage with limited hearing

- Usage with limited manipulation or strength

- Usage with limited cognition

The Directive does not specify whether the Success Criterion must have a primary relationship to the user accessibility need. If the secondary relationship between the Success Criterion and the user accessibility needs is considered, we also cover usage without vocal capability.

Due to the restriction of covering Success Criteria that had accompanying ACT Rules, which were completed and implemented in all three tools at the time of testing, the user accessibility needs of usage without the perception of colour and the need to minimize photosensitive seizure triggers were not covered. The project is taking all the user accessibility needs into consideration when deciding which ACT Rules to prioritize.

There are additional Success Criteria that may be relevant to include in a simplified monitoring. Based on results from the Norwegian Digitalisation Agency’s (then Difi – the Agency for Public Management and eGovernment) monitoring in 2018 and supervision in 2019, we have identified several Success Criteria in WCAG 2.0 with a high risk of uncovering errors on the websites tested.

Examples of Success Criteria, in addition to those included in the pilot, that should also be included in a monitoring are:

- 1.4.3 Contrast (Minimum)

- 2.2.2 Pause, Stop, Hide

- 3.3.1 Error Identification

- 3.3.2 Labels or Instructions

At the time of testing (February 2020), there were ACT Rules in development for Success Criteria 1.4.3, 2.2.2 and 3.3.1, but these ACT Rules had not yet been implemented in the tools. New ACT Rules are continually being developed and implemented, and coverage will increase with time.

4.3.3. ACT Rules used in the pilot

The ACT Rules developed in the WAI-Tools project are all connected to the Success Criteria in WCAG.

Since the pilot was constrained by the development and implementation of test rules in the project partners’ tools, the following 19 ACT Rules were used in both simplified and in-depth monitoring:

| Success Criterion | ACT Rule ID | ACT Rule name |
|---|---|---|
| 1.1.1 | 23a2a8 | Image has accessible name |
| 1.1.1, 4.1.2 | 59796f | Image button has accessible name |
| 1.2.2 | eac66b | Video element auditory content has accessible alternative |
| 1.2.3 | c5a4ea | Video element visual content has accessible alternative |
| 1.3.1, 4.1.2 | 6cfa84 | Element with aria-hidden has no focusable content |
| 1.3.4 | b33eff | Orientation of the page is not restricted using CSS transform property |
| 1.3.5 | 73f2c2 | Autocomplete attribute has valid value |
| 2.2.1 (2.2.4, 3.2.5 at level AAA) | bc659a | Meta element has no refresh delay |
| 2.4.2 | 2779a5 | HTML page has title |
| 2.4.4, 4.1.2 (2.4.9 at level AAA) | c487ae | Link has accessible name |
| 3.1.1 | b5c3f8 | HTML page has lang attribute |
| 3.1.1 | 5b7ae0 | HTML page lang and xml:lang attributes have matching values |
| 3.1.1 | bf051a | HTML page language is valid |
| 3.1.2 | de46e4 | Element within body has valid lang attribute |
| 4.1.1 | 3ea0c8 | Id attribute value is unique |
| 4.1.2 | 97a4e1 | Button has accessible name |
| 4.1.2 | 4e8ab6 | Element with role attribute has required states and properties |
| 4.1.2 | e086e5 | Form control has accessible name |
| 4.1.2 | cae760 | Iframe element has accessible name |
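
As a cross-check of the table, the rule-to-criterion mapping can be written out as data and counted. The sketch below transcribes the ACT Rule IDs and the Level A/AA Success Criteria from the table (the Level AAA criteria in parentheses are left out); the variable and function names are illustrative only.

```python
from collections import defaultdict

# ACT Rule ID -> Level A/AA Success Criteria, transcribed from the table.
ACT_RULES = {
    "23a2a8": ["1.1.1"],
    "59796f": ["1.1.1", "4.1.2"],
    "eac66b": ["1.2.2"],
    "c5a4ea": ["1.2.3"],
    "6cfa84": ["1.3.1", "4.1.2"],
    "b33eff": ["1.3.4"],
    "73f2c2": ["1.3.5"],
    "bc659a": ["2.2.1"],
    "2779a5": ["2.4.2"],
    "c487ae": ["2.4.4", "4.1.2"],
    "b5c3f8": ["3.1.1"],
    "5b7ae0": ["3.1.1"],
    "bf051a": ["3.1.1"],
    "de46e4": ["3.1.2"],
    "3ea0c8": ["4.1.1"],
    "97a4e1": ["4.1.2"],
    "4e8ab6": ["4.1.2"],
    "e086e5": ["4.1.2"],
    "cae760": ["4.1.2"],
}


def rules_per_criterion(rules):
    """Group ACT Rule IDs by the Success Criterion they map to."""
    grouped = defaultdict(list)
    for rule_id, criteria in rules.items():
        for criterion in criteria:
            grouped[criterion].append(rule_id)
    return dict(grouped)


COVERAGE = rules_per_criterion(ACT_RULES)

# Sort criteria numerically (1.1.1, 1.2.2, ..., 4.1.2) before printing.
for criterion in sorted(COVERAGE, key=lambda c: tuple(int(p) for p in c.split("."))):
    print(criterion, len(COVERAGE[criterion]))
```

Counting the entries confirms the figures used in this report: 19 ACT Rules covering 13 Success Criteria, with 4.1.2 being the criterion touched by the most rules.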

Over time as the project proceeds and the number of ACT Rules increases, the project and the ACT Rule community are building a library of commonly accepted rules. This will be of great importance for the Member States, due to the need for a well-documented and transparent interpretation of the accessibility requirements as a basis for monitoring.

4.4. Learnings

The findings and learning points from all the aspects of the planning are summarized below:

- It is crucial to analyse the Directive to identify requirements for monitoring and reporting.

- Before starting the tests and other data collection, we must define, as precisely as possible, which research questions are to be investigated, and then ensure that we collect all the data needed for analysis and reporting.

- The requirements for monitoring and reporting and the list of research questions underlie decisions on the following:

- The sample of public sector bodies/entities, web solutions, test object/pages etc. This applies to the size and composition of the sample, and the selection method for entities, web solutions and pages.

- The monitoring methods, tools, and test mode (automated, semi-automated, manual)

- For simplified monitoring: which Success Criteria shall be included, especially considering that automated testing is preferable

- Data needs, the methods for data collection, and the data sources

- Which analyses must be performed in order to report in alignment with the Directive

- In this pilot:

- We used 19 ACT rules that covered 13 WCAG 2.1 Success Criteria. In our opinion, it is of vital importance that this work on ACT rules development proceeds until all the accessibility requirements in the Directive are covered. This is due to the need for a documented, transparent, and commonly accepted test method.

- We met the requirements for simplified monitoring by covering the 4 principles in WCAG as well as 7 of 9 user accessibility needs.

- Since we used the same ACT Rules and Success Criteria in both simplified and in-depth monitoring, we covered 29 % of the Success Criteria required by the Directive (for the in-depth monitoring).

- Even though we had a somewhat limited scope regarding the number of requirements included, we came close to meeting the minimum requirements for simplified monitoring. However, based on findings in previous monitoring in Norway, there were several high-risk Success Criteria that were not covered in the pilot. This was because neither the development nor the implementation of the relevant test rules was completed when the pilot testing was performed.

- However, high-risk criteria are continuously being addressed in the project. Regardless of this, one should be aware that the selection (and exclusion) of Success Criteria in the monitoring may mean that significant accessibility problems are not uncovered. This applies especially to simplified monitoring.

- In the foreseeable future, we consider that there will be a need to supplement automated tests with both semi-automated and manual tests to cover all the Success Criteria and requirements in the standard. This applies especially to the in-depth monitoring.

Especially when planning the first monitoring and reporting:

- There is a comprehensive need to collect and store data in both simplified and in-depth monitoring, as described in chapter 4.2. The data must be collected from diverse data sources. We need to establish a data model to structure the data, in order to facilitate efficient data storage and retrieval. This data model is to some extent built on the open data format for accessibility test results that was developed by the project and implemented in the tools as one of the output formats.

- We plan to use the accessibility statements to collect structured data about the public sector bodies, web solutions, area of services, and individual services per entity. Later, we will consider combining the accessibility statements with automated tests that the entities can perform themselves. Part of the WAI-Tools project is to develop a prototype large-scale data browser, which would collect and analyse data from the accessibility statements. However, this was not available at the time of carrying out the pilot and may be considered in the future.

- However, it should be considered whether the requirements for monitoring and reporting are too extensive, especially when it comes to data needed to compose the samples of entities, web solutions, and pages, in line with the Directive (as described in Chapter 4.2.1-4.2.3). This applies in particular to the number of services, processes, pages, and documents that shall be tested in the in-depth monitoring. It should be considered if a selection of services may be enough, instead of monitoring them all.

In addition, we have identified a set of criteria that should be considered when selecting a tool for testing (and producing test results) in real monitoring:

- The coverage of WCAG, i.e. the tool should cover as many requirements/Success Criteria as possible

- The tool should, as far as possible, meet the needs for transparency, reproducibility, and comparability, thus

- it must be based on a documented interpretation of each of the requirements in the standard

- it should, as far as possible, be based on the ACT Rules, as they meet the need for a documented, transparent and commonly accepted test method

- the test rules (and the way they are implemented in the tools) must be documented in order to show which interpretation of the requirements is covered by each test

- the tool should include or be combined with a crawler that is suitable for sampling most of the pages and content that should be included in a monitoring

- the test results should specify the outcome of the tests, such as passed, failed, inapplicable (and not tested)

- the tool must give test results both on the element and the page level, specified per Success Criterion

- the tool should preferably give test results both on the page and the element level, specified per Success Criterion

- the number of tested elements and pages, especially failed elements and pages, should be counted and identified, per Success Criterion and in total

- the test results should be in a format suitable for analysis and reporting in line with the Directive and provide the website owners/the public sector bodies with the necessary information in their work for improving their websites
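
To illustrate the kind of output these criteria call for, the sketch below aggregates element-level results into counts per Success Criterion at both the element and the page level. It is a minimal example under stated assumptions: the `Result` record, its field names, and the sample URLs are hypothetical and do not reflect the open data format developed by the project or the output of any particular tool.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Result:
    """Hypothetical element-level test result."""
    page: str       # URL of the tested page
    element: str    # selector or pointer identifying the tested element
    criterion: str  # WCAG Success Criterion, e.g. "1.1.1"
    outcome: str    # "passed", "failed", "inapplicable" or "untested"


def summarise(results):
    """Count element outcomes and tested/failed pages per Success Criterion."""
    elements = defaultdict(Counter)
    pages_tested = defaultdict(set)
    pages_failed = defaultdict(set)
    for r in results:
        elements[r.criterion][r.outcome] += 1
        if r.outcome in ("passed", "failed"):
            pages_tested[r.criterion].add(r.page)
        if r.outcome == "failed":
            pages_failed[r.criterion].add(r.page)
    return {
        criterion: {
            "elements": dict(elements[criterion]),
            "pages_tested": len(pages_tested[criterion]),
            "pages_failed": len(pages_failed[criterion]),
        }
        for criterion in elements
    }


# Invented sample data for illustration only.
SAMPLE = [
    Result("https://example.org/", "img#logo", "1.1.1", "passed"),
    Result("https://example.org/", "img.banner", "1.1.1", "failed"),
    Result("https://example.org/contact", "img.map", "1.1.1", "failed"),
    Result("https://example.org/", "html", "2.4.2", "passed"),
    Result("https://example.org/contact", "html", "2.4.2", "passed"),
]

SUMMARY = summarise(SAMPLE)
```

Keeping the page and element identifiers in each record is what makes it possible both to report totals and to point website owners to the specific failures.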

5. Step 2: Sampling

In this chapter, we present the experience we gained when selecting a sample of public sector bodies, websites, and pages. The aim is to document methods and sources of data for selecting public sector bodies, websites, and webpages, in order to be prepared to create a sample in a real monitoring in line with the Directive. The Directive also describes the sampling criteria for mobile applications. The actual data we collected through the sampling is presented further in chapter 6.

Note: Due to the scope of the pilot, we have sampled and monitored websites only.

5.1. Public sector bodies and websites

The number of websites (and mobile applications) to be monitored in each monitoring period shall be calculated based on the population of the Member State.

Based on a population of 5 367 580, the monitoring in Norway shall include the number of websites listed in the table below:

| Monitoring method | Year 1 and 2 (websites) | From year 3 and then annually (websites) |
|---|---|---|
| Simplified | 182 | 236 |
| In-depth | 19 | 22 |
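
The figures in the table can be reproduced with a short calculation. The formulas below are not stated in this report; they are taken, as an assumption, from the minimum sample sizes in Commission Implementing Decision (EU) 2018/1524: two websites per 100 000 inhabitants plus 75 for simplified monitoring in the first two periods, three per 100 000 plus 75 thereafter, and 5 % of the simplified sample plus 10 for in-depth monitoring. Rounding to the nearest whole number is also an assumption, chosen because it matches the table. The function names are illustrative.

```python
POPULATION_NORWAY = 5_367_580  # population figure used in this report


def simplified_sample(population, per_100k):
    """Assumed formula: per_100k websites per 100 000 inhabitants, plus 75."""
    return round(per_100k * population / 100_000 + 75)


def in_depth_sample(simplified):
    """Assumed formula: 5 % of the simplified sample size, plus 10 websites."""
    return round(0.05 * simplified + 10)


simplified_first = simplified_sample(POPULATION_NORWAY, per_100k=2)  # years 1 and 2
simplified_later = simplified_sample(POPULATION_NORWAY, per_100k=3)  # from year 3

print("Simplified:", simplified_first, simplified_later)
print("In-depth:", in_depth_sample(simplified_first), in_depth_sample(simplified_later))
```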

The sampling of websites shall:

- aim for a diverse, representative and geographically balanced distribution

- cover websites from the following levels of administration

- state websites

- regional websites

- local websites

- websites of bodies governed by public law